The images analyzed here were provided by Dr. Ali Hadi, who presented the case at OSDFCon 2019, where it was also covered as a workshop. The download link is available on his website.

The first step is to find out what kind of image file we are dealing with. The file command runs a series of tests against a given file and identifies its type from its signature.

Running the file command against the image files revealed that they are in the EWF (Expert Witness/EnCase) image format. If you are analyzing disk images with a Linux forensic workstation, the images generally need to be in raw format. Linux wants raw. Common forensic formats such as E01, AFF, and even split raw are not directly usable by many Linux commands. The next step is therefore to get our E01 image into something that looks like a raw disk image. We will use ewfmount from libewf to expose a raw representation of the contents of the .E01 image that we can mount for analysis, as shown in the figure below. If you list the contents of the /mnt/evidence/master directory, you should see a file named ewf1. The file is strictly read-only. You will see a similar file in both the /mnt/evidence/slave1 and /mnt/evidence/slave2 directories.
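As a rough sketch of this step, the helper below only prints the ewfmount commands for the three hosts so they can be reviewed before being run. The mount points are the ones named above; the image directory argument and the `<host>.E01` file names are placeholders, since the actual evidence file names are not given here.

```shell
# Print (do not run) one ewfmount command per host.
# $1 is the directory holding the E01 files; the <host>.E01 names are hypothetical.
ewfmount_plan() {
  for host in master slave1 slave2; do
    printf 'ewfmount %s/%s.E01 /mnt/evidence/%s\n' "$1" "$host" "$host"
  done
}

ewfmount_plan /path/to/images
```

The mount point directories must exist before ewfmount is run, and each one will then contain the read-only ewf1 file described above.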

We are now prepared to use the appropriate tools to examine our forensic image. However, we must first gather some crucial data about our forensic image in order to move forward.

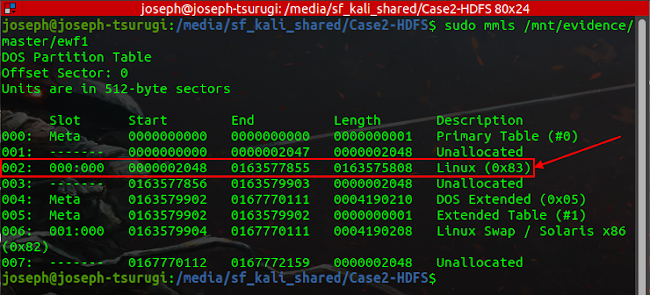

The volume of forensic significance is highlighted above, with a starting sector of 2048. It is a Linux partition, as revealed by running TSK's mmls command. Repeating the same command against slave1 produces the following output. The volume of interest is highlighted below. It also has a starting sector of 2048, and it is a Linux partition.
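The starting sector matters because later tools want the partition offset in different units. A minimal sketch of the conversion, assuming the 512-byte sector size that mmls typically reports in its header (check the "Units are in ..." line of your own output):

```shell
# Convert a starting sector into a byte offset.
# $1 = starting sector, $2 = sector size in bytes (512 is an assumption here).
sector_to_bytes() {
  echo $(( $1 * $2 ))
}

sector_to_bytes 2048 512
```

For a starting sector of 2048 this gives a byte offset of 1048576. TSK tools such as fsstat take the offset in sectors through their -o option, whereas mount expects the offset in bytes.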

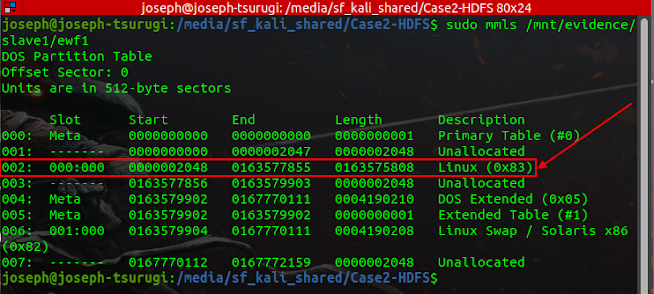

Repeating the same command against slave2 produces the following output. The volume of interest has also been highlighted accordingly.

We also want to obtain file system information for our respective images before we move forward.

Repeating the same command for the slave files reveals the following information.

The next step is mounting the partitions that hold the data of evidentiary value, so we can use standard Linux command-line tools to locate and extract artifacts.
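A sketch of what such a mount command can look like is shown below. It prints the command rather than running it. The raw image path and the /mnt/analysis/master mount point follow the paths used in this walkthrough, and the byte offset is the starting sector (2048) multiplied by an assumed 512-byte sector size. The exact option set is an assumption, not necessarily what was used in the figures.

```shell
# Print a read-only loop mount command for a partition inside a raw image.
# $1 = raw image, $2 = mount point, $3 = partition offset in bytes.
mount_cmd() {
  printf 'mount -o ro,loop,noexec,offset=%s %s %s\n' "$3" "$1" "$2"
}

mount_cmd /mnt/evidence/master/ewf1 /mnt/analysis/master 1048576
```

On an ext3 or ext4 file system with an unclean journal, adding the noload option is often necessary so that the kernel does not try to replay the journal on a read-only image.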

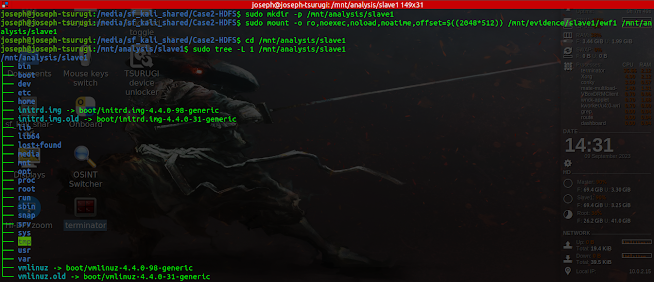

Mounting the slave1 image.

Mounting the slave2 image.

Forensic Analysis Of The HDFS Cluster

The first step is to enumerate the system under investigation. Below are details about the operating system of the server.

As seen from the results for our master server above, we are dealing with Ubuntu Linux 16.04.3 LTS, code-named Xenial. It is important to know the default time zone of the system you are investigating, because Linux log files and other important artifacts typically include timestamps written in the machine's local time zone.

We may also wish to obtain hostname information of the master and slave servers.
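The operating system, time zone, and hostname can all be read from plain-text files under the mounted root. The helper below is a minimal sketch: `os_summary` is a hypothetical name, and it assumes a Debian-family layout where /etc/os-release, /etc/timezone, and /etc/hostname exist.

```shell
# Print the OS name, time zone, and hostname recorded in a mounted image.
# $1 = root of the mounted file system.
os_summary() {
  root=$1
  # PRETTY_NAME is a standard os-release field; strip the surrounding quotes.
  sed -n 's/^PRETTY_NAME=//p' "$root/etc/os-release" | tr -d '"'
  # Debian and Ubuntu record the zone name in /etc/timezone.
  cat "$root/etc/timezone"
  cat "$root/etc/hostname"
}
```

It would be called as `os_summary /mnt/analysis/master`, and likewise for each slave mount point.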

Let us obtain information about the network configurations used on these three servers.

Repeating the same command for the slave servers returned the same information as shown above. Another important location to check is the /etc/hosts file, which is used to define static host lookups.

The installation date and time of the system under investigation are the next pieces of information we seek to ascertain. The dumpe2fs command prints the superblock and block group information for the file system on a device. First, we need to identify the device name of the / partition. Then we run dumpe2fs against it; the file system creation time it reports is a good indicator of the Linux operating system (OS) installation date and time.
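A minimal sketch of these two steps, assuming the partition was mounted through a loop device so that findmnt can report the backing device. Both function names are hypothetical, and dumpe2fs usually needs root privileges.

```shell
# Extract the creation time from dumpe2fs header output read on stdin.
fs_created() {
  sed -n 's/^Filesystem created:[[:space:]]*//p'
}

# Look up the device behind a mount point, then read its superblock header.
# $1 = mount point of the partition under analysis.
fs_created_for_mount() {
  dev=$(findmnt -n -o SOURCE "$1") || return 1
  dumpe2fs -h "$dev" 2>/dev/null | fs_created
}
```

For example, `fs_created_for_mount /mnt/analysis/master` would print the creation timestamp stored in the superblock of the master's root file system.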

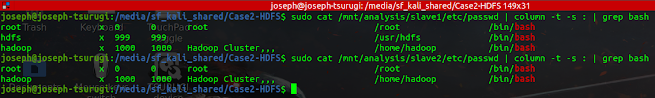

Let us find every registered user that has access to the system. This can be done by viewing the /etc/passwd file. It contains a list of all users on the system, their home directory, login shell, and UID and GID numbers. Viewing the file reveals the following.

From the result above, the users root and hadoop have a bash shell configured. These users will be the focus of our investigation. Running the same command on the slave servers, concentrating on users with a configured shell, revealed the following.
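Filtering /etc/passwd down to accounts with a usable login shell can be sketched with awk. The rule used here (the shell field ends in "sh") is a simplifying assumption that keeps bash, sh, dash, and zsh while dropping nologin and false.

```shell
# List user name, home directory, and shell for accounts with a login shell.
# $1 = path to a passwd file inside the mounted image.
login_shell_users() {
  awk -F: '$7 ~ /sh$/ { print $1, $6, $7 }' "$1"
}
```

It would be called as `login_shell_users /mnt/analysis/master/etc/passwd`.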

Now, let us obtain password information for these users by looking at the respective /etc/shadow files of the three servers. The result from the master server shows that a password is set for the user hadoop, as revealed by the password hash.

The result from the slave1 server shows that passwords are set for the users hadoop and hdfs, as revealed by their password hashes.

The result from the slave2 server shows that a password is set for the user hadoop, as revealed by the password hash.

We also want to identify users with sudo privileges by looking at the /etc/group files of the respective servers.
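The shadow and group checks can be sketched the same way. In /etc/shadow, the second field holds the password hash, and a value starting with ! or * means the account has no usable password. In /etc/group, the fourth field lists the members. Both function names are hypothetical.

```shell
# Users whose shadow entry carries a password hash.
# $1 = path to a shadow file inside the mounted image.
users_with_hash() {
  awk -F: '$2 != "" && $2 !~ /^[!*]/ { print $1 }' "$1"
}

# Comma-separated members of the sudo group.
# $1 = path to a group file inside the mounted image.
sudo_members() {
  awk -F: '$1 == "sudo" { print $4 }' "$1"
}
```

Note that sudo rights can also be granted through /etc/sudoers and the files under /etc/sudoers.d, so group membership alone is not the whole picture.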

From previous steps, we were able to identify that this is an Ubuntu server. Every time a package is installed using the apt or dpkg package manager, a log entry is created in the /var/log/dpkg.log file. The history of installation activities is recorded in the /var/log/apt/history.log file. Let's examine both.

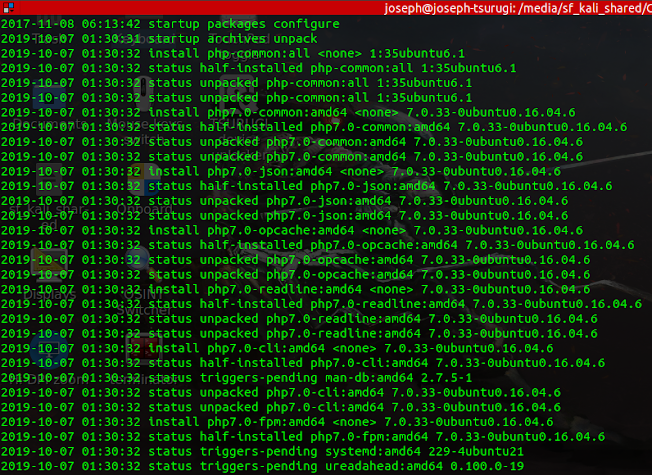

A truncated output of the content of the dpkg.log file is shown in the figure below.

A truncated output of the content of the history.log file is shown in the figure below.

From the above examinations, some unusual installations stand out. PHP and its accompanying packages were installed at the time of the intrusion, on October 7, 2019.
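To narrow dpkg.log down to a single day, a small awk filter is enough. The sketch below relies on the usual dpkg.log layout (date, time, action, package); the function name is hypothetical.

```shell
# Print the time and package of every "install" action on a given day.
# $1 = date as YYYY-MM-DD, $2 = path to dpkg.log inside the mounted image.
installs_on() {
  awk -v d="$1" '$1 == d && $3 == "install" { print $2, $4 }' "$2"
}
```

For this case it would be called as `installs_on 2019-10-07 /mnt/analysis/master/var/log/dpkg.log`.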

Attackers may use the normal service start-up mechanisms to restart their malware. On modern Linux systems that use systemd, service start-up configuration is found under /usr/lib/systemd/system (or /lib/systemd/system on releases such as Ubuntu 16.04 that have not merged /usr) and /etc/systemd/system. Older systems use configuration files under directories named /etc/init*. Look for recent changes to files under these directories. Note that in some cases these files may invoke other scripts that might have been modified by the attacker. This is much less obvious than the attacker modifying the start-up configuration files themselves.
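Both checks (what each unit runs, and which unit files changed recently) can be sketched as follows. The function names are hypothetical, and -newermt is a GNU find extension, so this assumes a GNU userland on the analysis workstation.

```shell
# Print every ExecStart* line, prefixed by the unit file it comes from.
# $1 = root of the mounted file system.
unit_commands() {
  root=$1
  for dir in etc/systemd/system lib/systemd/system usr/lib/systemd/system; do
    [ -d "$root/$dir" ] || continue
    grep -rH '^ExecStart' "$root/$dir" 2>/dev/null
  done
}

# List unit files modified after a given date.
# $1 = root of the mounted file system, $2 = date such as 2019-10-06.
units_changed_since() {
  root=$1 since=$2
  for dir in etc/systemd/system lib/systemd/system usr/lib/systemd/system; do
    [ -d "$root/$dir" ] || continue
    find "$root/$dir" -type f -newermt "$since"
  done
}
```

Any script or binary named in an ExecStart line is worth inspecting in turn, since the unit file itself may look harmless.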

Look through all these files and directories. Viewing the cluster.service file shows the following output.

Navigating to the directories highlighted above and viewing the content of the cluster.php file reveals a PHP web shell that is run as a systemd service.

How Did The Adversary Gain Access?

To determine how the adversary gained entry into the system, we will have to consult the relevant logs. Due to the prevalence of web server attacks, you will spend a significant portion of your DFIR career looking through web server logs. The web server logs can show when the breach happened and where the attackers came from. They might also reveal some information regarding the nature of the exploit. Critical system logs are found in the /var/log/ directory. This is largely a convention, however: they could be written anywhere in the file system, and you will find them in other places on other Unix-like operating systems.

The /var/log/btmp file stores failed login attempts. It is in a binary format and cannot be read as plain text, so it is dumped with the last command (using the -f option to point at the file), as shown below. We can see multiple failed logins for root and for other unusual user names, including hadoop, all originating from IP address 192.168.2.129 on October 6, 2019. This is suggestive of a brute-force attack.

The /var/log/wtmp file stores a record of login sessions and reboots. It uses the same binary format, so the last command is again used to dump its contents.

Looking at the result above, we can see multiple successful logins for the user hadoop from the same network address. To gain insight into how entry was made into the system, we will have to consult the auth.log file.

The result shows that the adversary gained entry using valid login credentials. In other words, the brute-force attempt succeeded and gave the adversary access to the master server.
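The same conclusion can be cross-checked by summarizing auth.log directly. The sketch below assumes the usual OpenSSH message wording ("Failed password for ... from IP port N" and "Accepted ... for user from IP"); the function names are hypothetical.

```shell
# Count failed SSH password attempts per source IP, busiest source first.
# $1 = path to auth.log inside the mounted image.
failed_by_ip() {
  grep 'Failed password for' "$1" |
    sed -n 's/.* from \([0-9.]*\) port .*/\1/p' |
    sort | uniq -c | sort -rn
}

# Show every successful SSH authentication.
accepted_logins() {
  grep 'sshd.*: Accepted ' "$1"
}
```

A large failure count from one address, followed by an Accepted line for the same address, is the pattern that points to a successful brute-force attack.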

A good back-door approach used by attackers is leveraging existing accounts, particularly application accounts like www or mysql that are normally locked. If the attacker sets a re-usable password on these accounts, they could use them to access the system remotely. Creating an authorized_keys entry in the user’s home directory is another way of opening up access to the account.

From previous results, we identified the home directories of the users who have sudo privileges and a configured shell. It is time to investigate these.
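Locating every authorized_keys file under the usual home directory locations is a quick first check. A minimal sketch, assuming user homes live under /home and /root inside the mounted image (service accounts with homes elsewhere would need those paths added):

```shell
# List authorized_keys files (including variants such as authorized_keys2).
# $1 = root of the mounted file system.
find_authorized_keys() {
  find "$1/home" "$1/root" -type f -path '*/.ssh/authorized_keys*' 2>/dev/null
}
```

Each file found should be compared against the keys the administrators expect, paying attention to modification times around the intrusion window.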

Looking at the home directory of the user hadoop in the Master server as seen in the figure above, the first thing that should trigger your curiosity is the file named 45010. It appears to be an ELF file as revealed by the command below.
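The file command identifies an ELF binary by its first four bytes (0x7f followed by the letters ELF). The same check can be sketched by hand, which is useful when triaging many files at once; `is_elf` is a hypothetical helper name.

```shell
# Succeed if the file starts with the ELF magic bytes 7f 45 4c 46.
# $1 = path to the file to test.
is_elf() {
  [ "$(head -c 4 "$1" | od -An -tx1 | tr -d ' \n')" = "7f454c46" ]
}
```

It can be combined with find, for example to flag every ELF file sitting in a home or temp directory of the mounted image.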

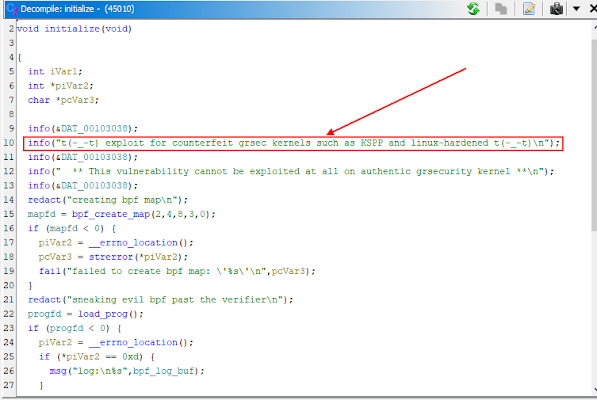

While the strings command can give an insight into what this executable file is about, I prefer to disassemble it to gain a more robust understanding of what this file does. Disassembling with Ghidra and reading every function of the executable, I stumbled upon this.

From the result of the disassembly, it is obvious that the file 45010 is the binary used by the adversary for exploiting the kernel and gaining root privileges. We should also investigate the history of commands entered by the user hadoop for some more leads.

sudo cat /mnt/analysis/master/home/hadoop/.bash_history

To validate that entry was made into the slave servers via ssh, we will have to examine the auth.log files of the respective servers. Below is the result for the Slave1 server.

sudo cat /mnt/analysis/slave/var/log/auth.log

Below is the result for the Slave2 server.

From the above results, we can conclude that the adversary used ssh to move laterally across the network, using the ssh keys stored at /home/hadoop/.ssh/authorized_keys on the Master server to log in to the Slave1 and Slave2 servers.

We may also wish to examine the bash command history on the Slave1 and Slave2 servers to see if we can find something useful.

We can observe the deletion of the file 45010 from the temp directories of both the slave1 and slave2 servers. We already know what this file is and what it does from previous steps.

In summary, the following could be deduced from the examination conducted.

- Compromise was due to weak credentials: the adversary's brute-force attack was successful.

- Privilege escalation using kernel vulnerability CVE-2017-16995.

- A systemd service running a PHP web shell was installed after gaining root access.

- Lateral movement to other hosts on the network using SSH.
